Adding Elasticsearch Integration to Pleroma

If you've moved to Akkoma, this guide is OUTDATED. Refer to the Akkoma docs if you still want to do this.

Pleroma search sucks. Let's face it, it sucks. Searching through an entire Postgres table for matches isn't exactly efficient, and it's common that absolutely nothing relevant is returned by your query.

So I, being very cool and also the best, have fixed this. For adequately low values of "fixed". Basically my solution is to shove activities, users and hashtags into Elasticsearch indices and use the extremely good search provided therein.

It works nicely: searching all indices takes about 3-4s, and it handles just about everything you'd want. Less specific queries can take a few seconds more, but they time out after 5s so it's not the end of the world.

So it is time to walk you through how to set it up. This will require no previous ES knowledge.

WARNING: Only do this if you are technically competent and don't mind potentially resolving merge conflicts down the line.

You will need:

- Pleroma from source (OTP will not work)

- A VPS

- An elasticsearch installation (it's probably in your distro's repo, else see here)

First, please sweet jesus apply a password to your ES. Find your elasticsearch.yml (probably in /etc/elasticsearch) and add:

network.host: 127.0.0.1

http.host: 127.0.0.1

xpack.security.enabled: true

Then run elasticsearch-setup-passwords (probably in /usr/share/elasticsearch/bin) to set your passwords.

Check that ES is running with curl -u username:password http://localhost:9200/
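If you'd rather script that check than eyeball it, here's a minimal sketch. It asks the standard `_cluster/health` endpoint to wait until the cluster is at least yellow. The `ES_URL` and `ES_AUTH` variables and both function names are my own placeholders, not anything Pleroma or ES defines.

```shell
# Placeholder values: swap in your own URL and credentials.
ES_URL="${ES_URL:-http://localhost:9200}"
ES_AUTH="${ES_AUTH:-username:password}"

# Build the cluster health URL; $1 is an optional wait timeout (default 30s).
es_health_url() {
  printf '%s/_cluster/health?wait_for_status=yellow&timeout=%s\n' "$ES_URL" "${1:-30s}"
}

# Exit status 0 if ES answered with a 2xx response, non-zero otherwise
# (including a failed login or a wait that timed out).
es_ready() {
  curl --silent --fail --output /dev/null -u "$ES_AUTH" "$(es_health_url "$@")"
}
```

Something like `es_ready 10s && echo "ES is up"` should then tell you whether it's safe to carry on.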

Now we can get down to the complex stuff.

We'll need to add my personal git as a remote so you can merge my branch. There's a PR open on the repository showing the changes against mainline's develop just so you can be sure I'm not backdooring you or anything.

cd /path/to/pleroma

git remote add elastic-fork https://git.ihatebeinga.live/IHBAGang/pleroma.git

git fetch elastic-fork

git merge elastic-fork/feature/elasticsearch

This has now added my changes, hooray. Now we need to configure it.

In config/prod.secret.exs:

config :pleroma, :search, provider: Pleroma.Search.Elasticsearch

config :pleroma, :elasticsearch, url: "http://localhost:9200"

config :elastix,

shield: true,

username: "my-user",

password: "my-password"

Change the URL, username and password as required.

Now we need to create some indices. This isn't strictly necessary but we're going to.

curl -XPUT http://localhost:9200/activities/ -u username:password

curl -XPUT http://localhost:9200/activities/activity/_mapping\?include_type_name --data-binary @priv/es-mappings/activity.json -H "Content-Type: application/json" -u username:password

curl -XPUT http://localhost:9200/users/ -u username:password

curl -XPUT http://localhost:9200/users/user/_mapping\?include_type_name --data-binary @priv/es-mappings/user.json -H "Content-Type: application/json" -u username:password

curl -XPUT http://localhost:9200/hashtags/ -u username:password

curl -XPUT http://localhost:9200/hashtags/hashtag/_mapping\?include_type_name --data-binary @priv/es-mappings/hashtag.json -H "Content-Type: application/json" -u username:password
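Those six commands are the same two requests repeated for three index/type pairs, so they can be rolled into a loop. This is a rough sketch, not part of the branch: `ES_URL`, `ES_AUTH`, `PAIRS` and the function names are placeholders I made up, and it assumes you run it from the Pleroma source directory so the `priv/es-mappings` paths resolve.

```shell
# Placeholder values: swap in your own URL and credentials.
ES_URL="${ES_URL:-http://localhost:9200}"
ES_AUTH="${ES_AUTH:-username:password}"

# index:type pairs, taken from the curl commands above.
PAIRS="activities:activity users:user hashtags:hashtag"

# Build the mapping URL for an index ($1) and its type ($2).
mapping_url() {
  printf '%s/%s/%s/_mapping?include_type_name\n' "$ES_URL" "$1" "$2"
}

# Create each index, then upload its mapping. Stops at the first failure.
create_indices() {
  for pair in $PAIRS; do
    index="${pair%%:*}"
    type="${pair##*:}"
    curl --fail -XPUT "$ES_URL/$index/" -u "$ES_AUTH" || return 1
    curl --fail -XPUT "$(mapping_url "$index" "$type")" \
      --data-binary "@priv/es-mappings/$type.json" \
      -H "Content-Type: application/json" -u "$ES_AUTH" || return 1
  done
}
```

Run `create_indices` once. Because of `--fail`, it will stop early if an index already exists, since ES rejects creating the same index twice.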

Cool. Nice. Ok data time. This will take a while.

export MIX_ENV=prod

mix deps.get

mix compile

mix pleroma.search import activities

mix pleroma.search import users

mix pleroma.search import hashtags

This will import all old data from your postgres db into our indices.

You can keep track of your progress by running:

curl http://localhost:9200/activities/_count -u username:password

curl http://localhost:9200/users/_count -u username:password

curl http://localhost:9200/hashtags/_count -u username:password

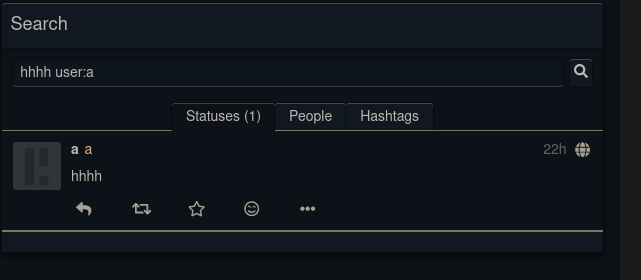
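If you don't fancy re-running those three by hand, a small helper can print all the counts in one go. This is only a sketch: the variable and function names are placeholders of mine, and it pulls the number out of the `_count` JSON response with `sed` rather than a proper JSON parser, which is good enough for a progress check.

```shell
# Placeholder values: swap in your own URL and credentials.
ES_URL="${ES_URL:-http://localhost:9200}"
ES_AUTH="${ES_AUTH:-username:password}"

# Read a _count response on stdin and print just the number.
extract_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Print the current document count for each of the three indices.
show_counts() {
  for index in activities users hashtags; do
    n="$(curl --silent -u "$ES_AUTH" "$ES_URL/$index/_count" | extract_count)"
    printf '%s: %s\n' "$index" "${n:-unavailable}"
  done
}
```

Something like `while show_counts; do sleep 10; done` in a second terminal gives you a running tally while the import grinds away.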

Now in theory you should be able to reboot your instance and have good, fast search.

Hooray.

Extra Info

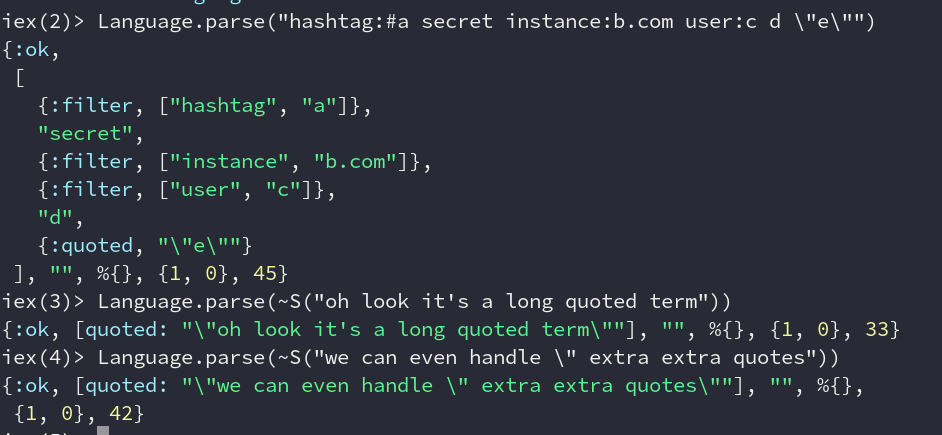

Supported filters:

- hashtag:#someTag or hashtag:someTag

- user:someUsername

- instance:someinstance.net

Also supports "quoted terms" and just random terms
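To poke at this from the command line, you can hit the Mastodon-compatible `/api/v2/search` endpoint that Pleroma exposes. A rough sketch follows. `INSTANCE_URL` is a made-up placeholder, and mixing several filters and terms in one query is my assumption about how they combine, so check the results yourself. Depending on your instance settings you may also need to pass an access token.

```shell
# Hypothetical instance URL: replace with your own.
INSTANCE_URL="${INSTANCE_URL:-https://example.social}"

# Join filters and free terms into one space-separated query string.
build_query() {
  printf '%s\n' "$*"
}

# Send the query; curl handles the URL encoding of '#', ':' and quotes.
search() {
  curl --silent --get "$INSTANCE_URL/api/v2/search" \
    --data-urlencode "q=$(build_query "$@")"
}
```

For example, `search 'hashtag:#someTag' 'instance:someinstance.net'` or `search '"quoted terms"'`.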